Audit and risk management are really two perspectives or “flavors” of the same measurement and inspection processes. In blog posts from October and November 2018, I discussed some of the key aspects of these processes and argued that integrating them gives executive management a sharper picture of their true risk position and the effectiveness of the controls in place. If you’ve not read them, please look back for some of the foundational arguments assumed here.

Articles abound today in Forbes, in the Wall Street Journal, and from large consulting firms and business schools (e.g., Accenture, Deloitte, and Kellogg) addressing artificial intelligence (AI) and its impact upon risk management. Some talk about the risks of AI technology, others about how AI will enrich risk management. They all offer insight into technological directions and strategies well suited to large firms with ample financial and human talent resources dedicated to taking leadership actions in this emerging arena. To be frank, we are all surrounded by emerging AI of different capabilities in different places. Staff working at home are surrounded by “smart” appliances, voice-activated devices, and new TVs, to name a few, all capable of “seeing” and “listening” to the activity around them, gathering this data, and storing it in some provider’s cloud. This is just one example. Mobile devices are another source of AI “opportunity”.

So, what’s a company to do when it does not have the robust financial and talent resources to acquire and apply AI tools and services to its risk management efforts right now? It can take advantage of tools already in place, thoughtfully applied, to gain some measure of closure against these possibilities. AI is in large measure about the ability to churn through endless mountains of data to find obscure but significant trends or associations that would otherwise be difficult to discover. It’s also about machine learning, which likewise involves massive but efficient data churning, pattern detection, and response. AI is not magic. Often, at its roots, it’s not even science, but algorithmic muscling through these data stores. Well, there is some science in specialized hardware like LIDAR (Light Detection and Ranging), used in smart cars, but we’re not going there here.

If you have a GRC platform solution in place for risk assessment and management, accompanied by or (better) integrated with an effective internal audit process, you have the foundations of the data stores needed to address some of these newer threat and risk vectors potentially impacting your business. This affords you the opportunity to use data analysis tools to help you achieve some very useful and directive results for your risk program. If your current GRC doesn’t incorporate such tools, there are numerous OTC solutions available.

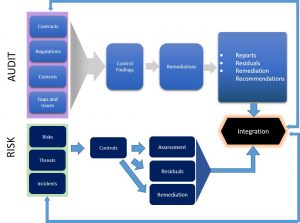

Basic integration of Risk and Audit at the Results Level

Good reporting answers business questions leading to actionable direction. Some of the key questions to be explored for any cyber risk management program would include these:

- What threats pose the greatest risk to our business?

- What have we done to reduce the risk posed by these threats?

- Are these controls in force and effective? How do we know?

- Have we done enough? What is our risk appetite?

- Are we compliant with commitments in place through regulation and contractual obligation?

- Do we have the resources to assure risk is managed within our needs?

- What processes are in place to identify and manage incidents or breaches when they occur?

The data combined from risk management and audit processes is not the complete set of information a risk solution aided by AI analysis processes could handle. There are external factors, such as marketplace data, client or customer data, and financial and competitive inputs, that might also feed a comprehensive threat or risk identification effort. But the pairing of audit and risk can provide a strong basis for threat identification and risk management. You’ll be able to know the state of threat identification within your enterprise. How well current controls address risk mitigation will be clear to see. Risks remaining in place with no apparent management strategy should also be straightforward to identify. Sharing these data with your executive team will enable clear, well-informed decisions regarding your current risk position.
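To make the pairing of risk and audit data concrete, here is a minimal Python sketch of how a risk register might be joined with audit findings to answer the questions above. Everything in it is an assumption made for illustration: the `Risk` and `AuditFinding` records, the `risk_position` function, the four status labels, and the sample data are hypothetical and do not reflect any particular GRC product's data model.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Risk:
    """One entry in a (hypothetical) risk register."""
    risk_id: str
    description: str
    control_ids: Tuple[str, ...]  # controls meant to mitigate this risk


@dataclass(frozen=True)
class AuditFinding:
    """One (hypothetical) audit result for a single control."""
    control_id: str
    effective: bool  # was the control found in force and effective?


def risk_position(risks: List[Risk], findings: List[AuditFinding]) -> Dict[str, str]:
    """Label each risk 'unmanaged', 'untested', 'weak', or 'covered'."""
    # Keep one verdict per control; later findings overwrite earlier ones.
    verdict = {f.control_id: f.effective for f in findings}
    position = {}
    for risk in risks:
        if not risk.control_ids:
            position[risk.risk_id] = "unmanaged"  # no management strategy at all
        elif not any(c in verdict for c in risk.control_ids):
            position[risk.risk_id] = "untested"   # controls exist but were never audited
        elif any(verdict.get(c, False) for c in risk.control_ids):
            position[risk.risk_id] = "covered"    # at least one control audited as effective
        else:
            position[risk.risk_id] = "weak"       # audited, but none found effective
    return position


# Illustrative sample data only.
risks = [
    Risk("R1", "Phishing of staff credentials", ("C1", "C2")),
    Risk("R2", "Smart devices listening in home offices", ()),
    Risk("R3", "Unpatched servers", ("C3",)),
    Risk("R4", "Third-party vendor breach", ("C4",)),
]
findings = [
    AuditFinding("C1", False),
    AuditFinding("C2", True),
    AuditFinding("C3", False),
]

for risk_id, status in risk_position(risks, findings).items():
    print(risk_id, status)
```

A report built this way separates risks with no controls at all from risks whose controls exist but have never been tested or have tested poorly. Each of those conditions calls for a different conversation with the executive team.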

The risks and threats associated with the use of AI operationally can be addressed within standard enterprise risk management (ERM) frameworks if you treat the use of AI technologies within your firm as just one more potential source of risk. The core processes of identification, response, remediation, and such apply to risks associated with threats and attacks employing AI technologies, whether they originate from the operational use of AI by your enterprise, or from attacks enabled through its use by threat actors. This doesn’t mean you should avoid employing AI-powered risk technologies when you can, but you do need to understand fully what they might do, how they work, and how to manage any inherent bias in the algorithms they employ.

Commercial products are often designed to meet a wide swath of market and industry needs. To do that, some assumptions are likely made about how those businesses behave, how their customers interact, what transactions are most “common”, and what threats are most likely present. These assumptions naturally establish some biases that can often be tuned through configuration, but still impact the results of any data analysis. So, employing such tools needs to include a thorough review of risks associated with the application of AI. Inherent bias in operations or in risk management tools also exists outside the AI sphere, but human intervention, operation, and interpretation of results often counteract those attributes.

One way to integrate audit, security, and cyber risk is through common controls mapping: understanding which activities and controls contribute to more than one operational or regulatory compliance obligation or security best practice, or help thwart a malicious threat. Consistent patterns in these controls, and then in the data resulting from audit findings, the state of remediation efforts, and the frequency with which weak or inadequate controls are discovered, can all point to trends useful in managing your overall enterprise risk posture. While AI risk management tools might help automate some of this analysis, these tools are data consumers, and do not have the ability to apply the inherent human knowledge, comprehension, and experience with the operation and performance of any particular business. For that, experienced human review and interpretation of the data, however arrayed or combined, remains an invaluable contributor to comprehensive data analysis and the resulting risk identification process.
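A common controls map can be sketched with very simple data structures. The Python below is only an illustration under stated assumptions: the control names, the obligation labels, and the helper functions `common_controls`, `controls_by_obligation`, and `weak_finding_counts` are all hypothetical, and the mapping is not a statement of what any regulation or standard actually requires.

```python
from collections import Counter, defaultdict

# Hypothetical map: each control -> the obligations it contributes to.
CONTROL_MAP = {
    "MFA for remote access": {"HIPAA", "ISO 27001", "Client contract A"},
    "Quarterly access review": {"HIPAA", "ISO 27001"},
    "Visitor sign-in log": {"ISO 27001"},
}

# Hypothetical audit history: controls named in weak or inadequate findings.
WEAK_FINDINGS = [
    "Quarterly access review",
    "Visitor sign-in log",
    "Quarterly access review",
]


def common_controls(control_map, min_obligations=2):
    """Return controls that support at least `min_obligations` obligations."""
    return {
        control: sorted(obligations)
        for control, obligations in control_map.items()
        if len(obligations) >= min_obligations
    }


def controls_by_obligation(control_map):
    """Invert the map: each obligation -> sorted list of supporting controls."""
    inverted = defaultdict(list)
    for control, obligations in control_map.items():
        for obligation in obligations:
            inverted[obligation].append(control)
    return {obligation: sorted(controls) for obligation, controls in inverted.items()}


def weak_finding_counts(weak_findings):
    """Count how often each control appears in weak or inadequate findings."""
    return Counter(weak_findings)


shared = common_controls(CONTROL_MAP)
repeat_offenders = weak_finding_counts(WEAK_FINDINGS)

# A shared control with repeated weak findings affects several obligations at once.
for control in shared:
    print(control, shared[control], repeat_offenders[control])
```

The counts only flag where to look. Deciding whether a repeatedly weak control reflects a real exposure or a quirk of how the business operates is still a job for experienced reviewers.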

The common data stores created by consolidating data from many sources into an AI tool, or through an integrated GRC program with internal and external audit processes, both offer the opportunity for a better-informed understanding of enterprise risk and of the effectiveness of the controls and remediations set forth to address foreseen threats. As always, there are multiple approaches and paths to achieve these informed ends. AI is useful for large businesses with complex and extensive risk footprints and trained staff to manage these tools and interpret their findings. Firms with less complicated circumstances, employing strong audit programs supported by GRC technologies to help consolidate, integrate, and evaluate results from these program activities, may be able to achieve much the same ends. Both approaches benefit from oversight and results interpretation by experienced, knowledgeable people, who can employ what they know to filter inherent bias while taking advantage of the best information before them. Likewise, as a check or control on human bias, consolidated data from multiple sources helps strengthen arguments for new discoveries or for positions previously downplayed or ignored because of past practice, habit, or management preferences to date.

Together, consolidated, integrated technologies offer better opportunities for sound risk and security management than siloed disciplines reporting separately and operating independently. They help prevent needless duplication and redundancy of effort, reduce operating overhead, and lead to more complete and comprehensive solutions to address weaknesses and confirm strengths in your risk management program. Artificial intelligence, whether employed to support operational business transactions or to evaluate risk, will grow in utility and reliability as technology matures. Meanwhile, its current state will continue to offer risk opportunities for enterprises to resolve. Creative, thoughtful deployment of well-vetted and reliable GRC and audit tools will continue to provide sound risk management information to support enterprise leaders’ informed decisions for some time to come.

About the Author:

Simon Goldstein is an accomplished senior executive blending both technology and business expertise to formulate, impact, and achieve corporate strategies. A retired senior manager of Accenture’s IT Security and Risk Management practice, he has achieved results through the creation of customer value, business growth, and collaboration. An experienced change agent with primary experience in the financial, technology, and retail industries, he has led efforts to achieve ISO2700x certification and HIPAA compliance, and has held the CRISC, CISM, and CISA credentials.